I am a member of a development team that has nearly finished replacing Apache Solr with Elasticsearch in our web application. This case study recounts the methods we used and the challenges we faced along the way. Read on to learn why and how we pulled it off.

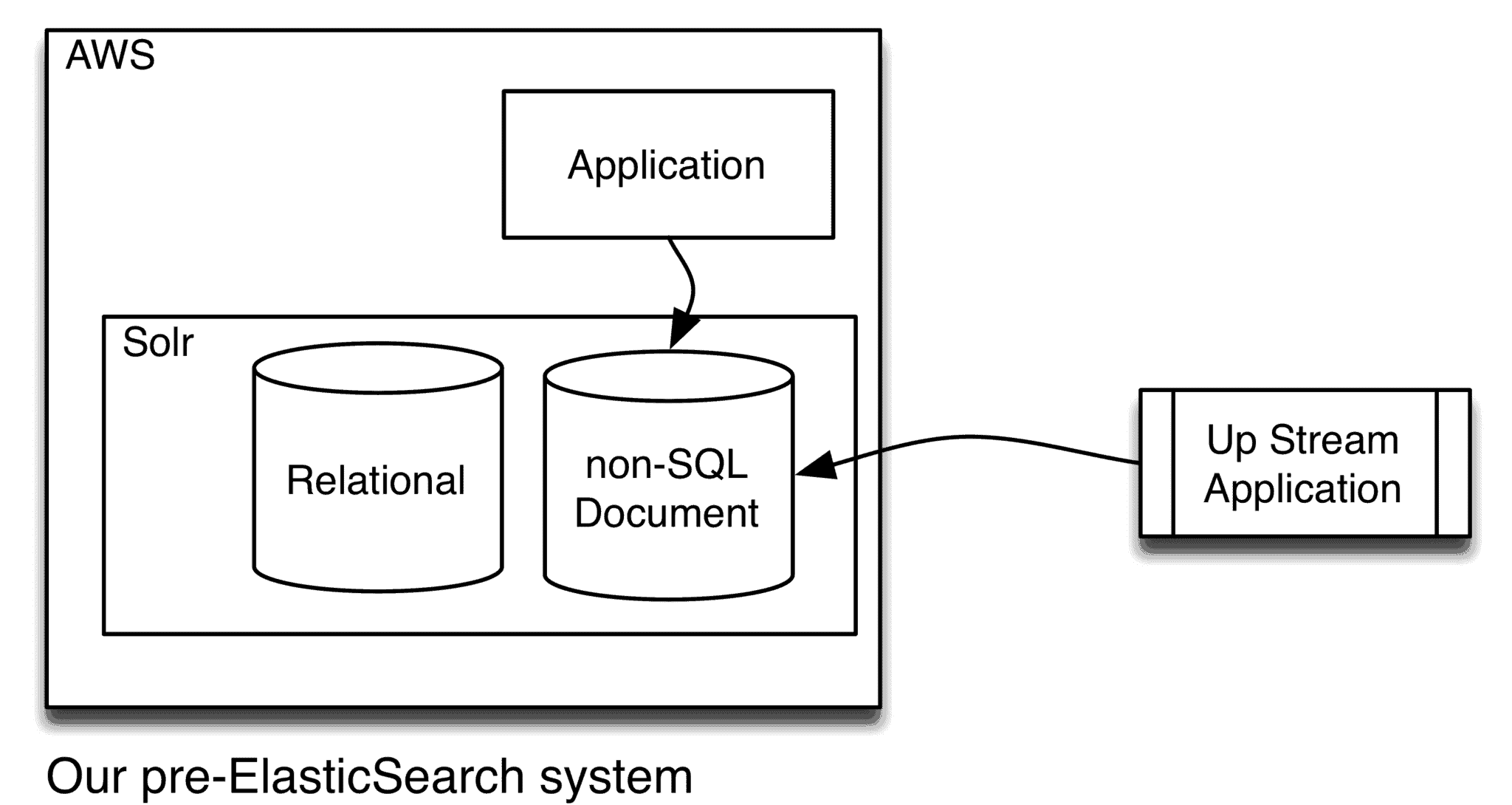

Our web application uses a relational database as well as a non-SQL document storage database. The content in the non-SQL database is derived from two different applications: our own, and an upstream application that provides data to our system. The combined content is indexed so that it can be searched from our application. The web application and its databases are hosted in the cloud using Amazon Web Services (AWS).

Enter Elasticsearch

There were design flaws in how the indexed data in our non-SQL database was organized and maintained. These flaws led to poor search performance and difficult maintenance processes. The team decided that a full redesign was required. Since a redesign is already a major disruption, it was also an opportunity to change the underlying non-SQL database technology. Elasticsearch was selected as the target platform because it meets our needs for storage, indexing, and maintainability. Elasticsearch is also offered directly as a managed service in AWS, whereas Solr is not.

These ideas guided our team in our goal to replace Solr with Elasticsearch:

- The migration must be gradual. If there is a problem with the data, platform, or the new design, it is better if the scope of the problem is limited, rather than impacting all areas of the application.

- Data integrity must be verifiable. It is important to have confidence that no data has gone missing and no application features are broken.

- Performance improvement must be measurable. Only by measuring the performance of both the old solution and the new solution will there be any proof of improvement.

- Indexed data must be ephemeral and dynamic. The data stored and indexed in Elasticsearch is second-hand data, so it must be discardable and rebuildable on demand. The primary data reside in our application’s relational database and the upstream application’s databases.

The following summarizes the steps we took towards achieving our goals.

Create test Elasticsearch instances

A new Elasticsearch instance was created using the Amazon Elasticsearch Service for each of our test environments. Developers also installed a standalone Elasticsearch instance on their development computers.

Abstract any Solr-specific application code

All of the Solr-related code in the application had to be gathered behind a concise “search index” interface that was not specific to Solr.
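To make the idea concrete, here is a minimal sketch of what a backend-neutral “search index” interface could look like. The names `SearchIndex`, `index_document`, `delete_document`, and `search` are hypothetical and chosen for illustration; they are not taken from our application. The in-memory class is only a stand-in so that the example runs without Solr or Elasticsearch.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from typing import Any


class SearchIndex(ABC):
    """Backend-neutral contract; every name here is illustrative."""

    @abstractmethod
    def index_document(self, doc_id: str, document: dict[str, Any]) -> None:
        """Add or replace one document."""

    @abstractmethod
    def delete_document(self, doc_id: str) -> None:
        """Remove one document if it exists."""

    @abstractmethod
    def search(self, field: str, term: str) -> list[str]:
        """Return the sorted IDs of documents whose field contains the term."""


class InMemorySearchIndex(SearchIndex):
    """Stand-in backend, used only to show the contract in action."""

    def __init__(self) -> None:
        self._docs: dict[str, dict[str, Any]] = {}

    def index_document(self, doc_id: str, document: dict[str, Any]) -> None:
        self._docs[doc_id] = dict(document)

    def delete_document(self, doc_id: str) -> None:
        self._docs.pop(doc_id, None)

    def search(self, field: str, term: str) -> list[str]:
        needle = term.lower()
        return sorted(
            doc_id
            for doc_id, doc in self._docs.items()
            if needle in str(doc.get(field, "")).lower()
        )
```

With a contract like this, application code depends only on `SearchIndex`, and a Solr-backed or Elasticsearch-backed class can be swapped in without touching the callers.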

Create Elasticsearch alternative implementation

A second implementation of the “search index” interface that works with Elasticsearch was written. At the same time, integration tests for the interface were written in order to demonstrate that the Elasticsearch implementation behaved the same as the Solr implementation.

Acquire data from upstream system

A feature was added that queries the upstream system and indexes all of its data in Elasticsearch. This feature meets the need for the indexed data to be rebuildable on demand. At full scale, the amount of data is in the tens of gigabytes. Therefore, the feature was implemented as an asynchronous task that runs on a schedule and can also be started on demand.

Enable multiple deployment configurations

Throughout the development process, the application needed to continue to be usable with the older Solr index. Multiple deployment configurations were set up to allow an incremental release of Elasticsearch and replacement of Solr. The configurations are:

- Use Solr only

- Write to both, but read from Solr only

- Write to both, read from Solr in some areas of the application, and read from Elasticsearch in others

- Write to both, read from Elasticsearch only

- Use Elasticsearch only

In the configurations where both Elasticsearch and Solr are written to, the application needs to be able to detect and handle partial write failures. A partial write failure is when data is successfully written to one database, but not successfully written to the other.
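The configurations and the partial write check can be sketched as follows. This is an illustration under assumptions, not our actual implementation: the `Mode` names, the `es_areas` set that decides which application areas read from Elasticsearch, and the `write_everywhere` helper are all hypothetical. What to do after a partial failure (retry, queue a repair, alert) is left to the caller.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Tuple

SOLR = "solr"
ES = "elasticsearch"


class Mode(Enum):
    """The five deployment configurations (names are illustrative)."""
    SOLR_ONLY = 1
    DUAL_WRITE_READ_SOLR = 2
    DUAL_WRITE_SPLIT_READ = 3
    DUAL_WRITE_READ_ES = 4
    ES_ONLY = 5


def write_targets(mode: Mode) -> Tuple[str, ...]:
    """Which indexes receive writes in the given configuration."""
    if mode is Mode.SOLR_ONLY:
        return (SOLR,)
    if mode is Mode.ES_ONLY:
        return (ES,)
    return (SOLR, ES)


def read_target(mode: Mode, area: str, es_areas: frozenset = frozenset()) -> str:
    """Which index serves reads for one area of the application."""
    if mode in (Mode.SOLR_ONLY, Mode.DUAL_WRITE_READ_SOLR):
        return SOLR
    if mode is Mode.DUAL_WRITE_SPLIT_READ:
        return ES if area in es_areas else SOLR
    return ES


@dataclass
class WriteResult:
    succeeded: List[str] = field(default_factory=list)
    failed: Dict[str, str] = field(default_factory=dict)

    @property
    def partial_failure(self) -> bool:
        # True only when one index accepted the write and another did not.
        return bool(self.succeeded) and bool(self.failed)


Writer = Callable[[str, dict], None]


def write_everywhere(mode: Mode, writers: Dict[str, Writer],
                     doc_id: str, document: dict) -> WriteResult:
    """Attempt the write on every target and record each outcome."""
    result = WriteResult()
    for name in write_targets(mode):
        try:
            writers[name](doc_id, document)
            result.succeeded.append(name)
        except Exception as exc:  # broad on purpose: any failure counts
            result.failed[name] = str(exc)
    return result
```

Attempting every target instead of stopping at the first error is a design choice in this sketch: it lets the caller see exactly which index is now out of step.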

Automate data consistency validation

We added a nightly job that compares the data stored in Elasticsearch, Solr, and our relational database. If any inconsistency is detected, an email is sent to the development team.
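The core of such a comparison might look like the sketch below. It assumes that each store can be reduced to a mapping from document ID to some comparable value, such as a version number or checksum, and that the relational database is treated as the reference. Both points are assumptions made for illustration, as are the function names. Loading the mappings and sending the email are outside the sketch.

```python
from typing import Dict, List

Snapshot = Dict[str, str]  # document ID -> version or checksum (assumed shape)


def compare_to_reference(reference: Snapshot, index: Snapshot) -> Dict[str, List[str]]:
    """List the IDs on which one index disagrees with the reference data."""
    shared = reference.keys() & index.keys()
    return {
        "missing": sorted(reference.keys() - index.keys()),
        "unexpected": sorted(index.keys() - reference.keys()),
        "mismatched": sorted(k for k in shared if reference[k] != index[k]),
    }


def find_inconsistencies(reference: Snapshot,
                         indexes: Dict[str, Snapshot]) -> Dict[str, Dict[str, List[str]]]:
    """Return a report containing only the indexes that have a discrepancy."""
    report = {}
    for name, index in indexes.items():
        diff = compare_to_reference(reference, index)
        if any(diff.values()):
            report[name] = diff
    return report
```

An empty report means the stores agree; a non-empty one is what would be turned into the notification email.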

Victory

At present, all of the steps are complete. The released version of the application is configured to read from Solr in some areas of the application and from Elasticsearch in others. Having only some parts of the application use the new database limits the impact of any bugs and allows a side-by-side comparison of performance.

Challenges along the way

There were some stumbles on the path getting to this point. One challenge was ensuring that the database remained consistent while being updated from multiple application servers. Elasticsearch offers external versioning as a method of optimistic locking when the data comes from an external source. The external versioning strategy worked for our use case.
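With external versioning, the caller supplies the version number on each index request (the `version` parameter together with `version_type=external`), and Elasticsearch accepts the write only if that number is higher than the version already stored; otherwise it reports a version conflict. The sketch below models that rule in memory so the behavior can be seen without a cluster. The class and exception names are hypothetical, and where the version number comes from (for example, a revision counter in the primary database) is an assumption.

```python
from typing import Dict, Tuple


class VersionConflict(Exception):
    """Raised when an incoming write is not newer than the stored document."""


class ExternallyVersionedStore:
    """In-memory model of the 'external' versioning rule (illustrative only)."""

    def __init__(self) -> None:
        self._docs: Dict[str, Tuple[int, dict]] = {}

    def index(self, doc_id: str, document: dict, version: int) -> None:
        current = self._docs.get(doc_id)
        # Accept the write only when the supplied version is strictly greater.
        if current is not None and version <= current[0]:
            raise VersionConflict(
                f"{doc_id}: stored version {current[0]}, received {version}"
            )
        self._docs[doc_id] = (version, dict(document))

    def get(self, doc_id: str) -> dict:
        return self._docs[doc_id][1]
```

If two application servers race, the one carrying the older version loses regardless of arrival order, so a stale write can never overwrite a newer document.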

The large volume of data to be processed posed another problem. Whenever there was an issue rebuilding or validating the index, attempts to reproduce it with the complete data set took too long. We had to rely on careful code review and on integration tests that created a limited amount of data in order to gain confidence that the design was correct.

One mistake that went unnoticed while testing with small datasets was the way we detected errors in Elasticsearch bulk operations. A bulk request can return a successful HTTP status even when some of its individual documents fail. The bulk API response contains an array with one result for each document in the operation. To determine which documents could not be updated, check the status of each individual result rather than the overall HTTP response code of the request.
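A minimal sketch of that per-item check is shown below. It works on the parsed JSON body of a bulk response, where `items` holds one single-key object per action (`index`, `create`, `update`, or `delete`) carrying that item's `_id`, `status`, and, on failure, an `error` object. The function name and the choice to treat any status of 400 or above as a failure are assumptions for this example.

```python
from typing import Any, Dict, List


def failed_bulk_items(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Collect the per-document failures from a parsed bulk API response."""
    failures = []
    for item in response.get("items", []):
        # Each item looks like {"index": {"_id": ..., "status": ..., ...}}.
        for action, result in item.items():
            status = result.get("status", 0)
            if status >= 400 or "error" in result:
                failures.append({
                    "action": action,
                    "id": result.get("_id"),
                    "status": status,
                    "error": result.get("error"),
                })
    return failures


# Example body: the HTTP request itself succeeded, yet one document failed.
sample_response = {
    "took": 30,
    "errors": True,
    "items": [
        {"index": {"_id": "1", "status": 201, "result": "created"}},
        {"index": {"_id": "2", "status": 409, "error": {
            "type": "version_conflict_engine_exception",
            "reason": "version conflict",
        }}},
    ],
}
```

Calling `failed_bulk_items(sample_response)` returns a single entry for document `"2"`, which is the one to retry or report.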

Upon reflection, the biggest challenge we faced was keeping the Elasticsearch index up to date with data from a system not under our control. There is no mechanism for easily identifying which data in the upstream application has changed over a period of time. We had to resort to polling and to implementing data reconciliation logic in our data ingest. Ideally, the upstream application would offer an API that reports the changes made over a period of time. In that case, we could keep track of the last synchronized state and update only the documents that actually changed.
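One way to reconcile polled data, sketched here under assumptions, is to keep a fingerprint of each record as it was last indexed and compare it with a fingerprint of the freshly polled record. The hashing approach and the function names are illustrative and are not a description of our actual ingest logic; the sketch also assumes that each poll returns the complete upstream data set as JSON-serializable records.

```python
import hashlib
import json
from typing import Dict, List, Tuple


def fingerprint(record: dict) -> str:
    """Stable hash of a record; key order does not affect the result."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(upstream: Dict[str, dict],
              indexed: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Compare polled records with the fingerprints of what is indexed.

    Returns (IDs to index or reindex, IDs to delete from the index).
    """
    to_index = sorted(
        doc_id for doc_id, record in upstream.items()
        if indexed.get(doc_id) != fingerprint(record)
    )
    to_delete = sorted(indexed.keys() - upstream.keys())
    return to_index, to_delete
```

Only the IDs returned by `reconcile` need to be sent to the index, which keeps each polling cycle from rewriting documents that have not changed.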

Conclusion

The approach we took turned out to be sound and we were able to successfully deliver a more usable system for our client and users. So far, everyone is happy with the new architecture and the benefits it is delivering.