Ever wake up in the middle of the night wondering whether your server is still working, because the pings are OK but you are getting connection timeouts and Java IdleConnectionReaper exceptions? This blog post talks about resource pooling in AWS with respect to long polling and connection reaping. Coming up to speed on any system means hitting some very technical road bumps, and I hope this entry helps some readers get past them.

The Issue

Late one night, our systems started to fail while processing some data. Our “heartbeat” pings (API calls) were taking more than 60 seconds to respond, but the system was still “up.” As a result, our monitoring system was spamming us with critical-failure alerts when, in fact, things were just taking a VERY LONG time to process.

What is Connection Reaping?

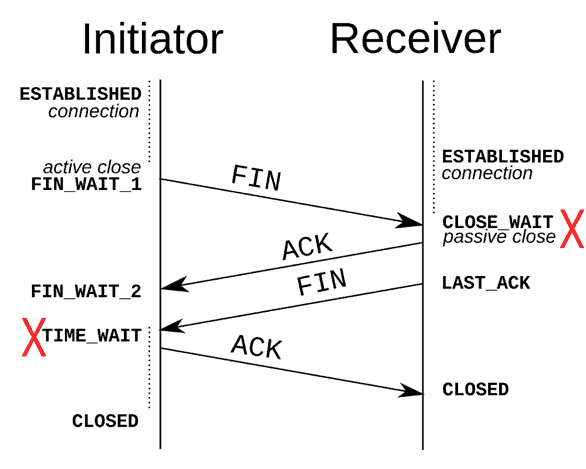

Connection reaping usually means finding connections that are sitting idle, such as those still waiting for a response, and killing them. AWS specifically goes after connections in “CLOSE_WAIT”, while most routers kill those in “TIME_WAIT”. When AWS does reap a connection, your application will see an IdleConnectionReaper exception.
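To make the idea concrete, here is a minimal Java sketch of a reaper in the style of the AWS SDK for Java's `IdleConnectionReaper` (see References): a daemon thread that wakes up on a fixed schedule and closes pooled connections that have been idle longer than a threshold. `IdleTrackingPool` and `SimpleReaper` are hypothetical names, connections are modeled as string IDs rather than sockets, and this is not the SDK's actual code.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical pool that only tracks when each connection was last used;
// a real pool would hold sockets and close them instead of forgetting IDs.
class IdleTrackingPool {
    private final Map<String, Long> lastUsedMillis = new ConcurrentHashMap<>();

    void markUsed(String connectionId, long nowMillis) {
        lastUsedMillis.put(connectionId, nowMillis);
    }

    boolean isOpen(String connectionId) {
        return lastUsedMillis.containsKey(connectionId);
    }

    // "Closes" every connection idle longer than maxIdleMillis; returns how many.
    int closeIdle(long maxIdleMillis, long nowMillis) {
        int closed = 0;
        for (Map.Entry<String, Long> e : lastUsedMillis.entrySet()) {
            boolean idleTooLong = nowMillis - e.getValue() > maxIdleMillis;
            // Two-argument remove skips connections that were used again meanwhile.
            if (idleTooLong && lastUsedMillis.remove(e.getKey(), e.getValue())) {
                closed++;
            }
        }
        return closed;
    }
}

// Daemon thread that sweeps the pool on a fixed schedule.
class SimpleReaper {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor(r -> {
                Thread t = new Thread(r, "idle-connection-reaper");
                t.setDaemon(true); // never keeps the JVM alive on its own
                return t;
            });

    void start(IdleTrackingPool pool, long maxIdleMillis, long periodMillis) {
        scheduler.scheduleAtFixedRate(
                () -> pool.closeIdle(maxIdleMillis, System.currentTimeMillis()),
                periodMillis, periodMillis, TimeUnit.MILLISECONDS);
    }

    void shutdown() {
        scheduler.shutdownNow();
    }
}
```

Because the sweep in this sketch looks only at idle time, a connection that is merely slow is indistinguishable from a dead one, which is exactly the situation described above.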

Depending on your application's retry logic, this can break everything!

It makes sense for AWS (or any leading cloud provider) to add this functionality for basic applications. After all, enough hung or waiting connections have the same effect as a DDoS attack: the OS can no longer open new sockets.

What this means for you

Seemingly persistent connections going away is not an unsolved problem. For the most part, the days of UDP are long gone (all hail TCP), and reliable connections are exactly what made TCP so great. Coding around a TCP system that suddenly drops connections the way UDP drops packets can cause real frustration and erratic application behavior.

It FORCES you to rethink your long-polling strategy and, probably, your approach to graceful recovery.
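One way to rethink a long-polling loop is to assume that any connection can vanish. The Java sketch below keeps each poll's wait shorter than an idle threshold you choose, treats a dropped connection as routine, and escalates only after several consecutive failures. `LongPollSource`, `LongPoller`, and all parameter values are hypothetical; the threshold should come from what you actually observe in your own environment.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical long-poll endpoint, e.g. a thin wrapper around your HTTP client.
interface LongPollSource {
    // Blocks for at most waitSeconds and returns whatever messages arrived.
    List<String> poll(int waitSeconds) throws IOException;
}

final class LongPoller {
    private LongPoller() {}

    // Runs `rounds` polls, keeping each wait below idleThresholdSeconds so no
    // single request sits silent long enough to be treated as idle. A dropped
    // connection is routine: it is counted and the loop simply polls again.
    static List<String> run(LongPollSource source, int waitSeconds,
                            int idleThresholdSeconds, int rounds,
                            int maxConsecutiveFailures) throws IOException {
        if (waitSeconds >= idleThresholdSeconds) {
            throw new IllegalArgumentException(
                    "poll wait must be shorter than the idle threshold");
        }
        List<String> received = new ArrayList<>();
        int consecutiveFailures = 0;
        for (int i = 0; i < rounds; i++) {
            try {
                received.addAll(source.poll(waitSeconds));
                consecutiveFailures = 0;
            } catch (IOException e) {
                consecutiveFailures++; // log e here to learn what is dropping you
                if (consecutiveFailures >= maxConsecutiveFailures) {
                    throw e; // persistent failure: escalate instead of spinning
                }
            }
        }
        return received;
    }
}
```

The design choice here is to separate "a connection dropped" (expected, retried silently) from "connections keep dropping" (escalated), so a single reaped connection never pages anyone at night.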

How to Solve it

For any given application, there are two solutions:

- Change the behavior of the AWS SDK's HTTP connection handling to disallow connection reaping. [Ref 2]

- Change the behavior of your application to accommodate IdleConnectionReaper exceptions. This usually means implementing very specific retry logic, and only YOU know what is important in your code. Rethink your connection-recovery logic and layer. If you are concerned with this problem, a simple try/catch will not be enough to solve it! (But for your sake, I hope it is!)
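As a starting point for the second option, here is a hedged Java sketch of retrying with exponential backoff. It retries only on `IOException`, the family that socket resets and timeouts belong to, and lets every other exception through untouched. `Retry.withBackoff` is a hypothetical helper, and retrying blindly like this is only safe for idempotent calls.

```java
import java.io.IOException;
import java.util.concurrent.Callable;

final class Retry {
    private Retry() {}

    // Calls `call` up to maxAttempts times, retrying only on IOException
    // (socket resets, timeouts, and similar transport failures). Any other
    // exception is treated as a real bug and propagates immediately.
    static <T> T withBackoff(Callable<T> call, int maxAttempts, long baseDelayMillis)
            throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        IOException last = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            try {
                return call.call();
            } catch (IOException e) {
                last = e;
                if (attempt + 1 < maxAttempts) {
                    // Exponential backoff: base, 2x base, 4x base, ... (exponent capped at 10)
                    Thread.sleep(baseDelayMillis << Math.min(attempt, 10));
                }
            }
        }
        throw last; // every attempt failed with a connection-level error
    }
}
```

For example, `Retry.withBackoff(() -> client.fetch(), 4, 200)` would try up to four times, sleeping 200, 400, and 800 ms between attempts (`client.fetch()` is a stand-in for your own call).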

Since connection pool management is handled by the AWS SDK, it would be better for AWS to address how its SDK recycles pooled connections (rather than leaving us to hack at it).

In Closing

Connection reaping will never go away while your applications are in the Cloud. In a multi-tenant hosting environment, failing to handle stale connections is equivalent to a DDoS attack on all tenants, bringing traffic to a complete stop. After all, Cloud providers have to manage their networks to give every customer a reliable experience.

Fixing this problem isn't easy, because every solution will be custom. The easiest way out would be to move your applications off the Cloud, but then you would give up all the benefits the Cloud offers and still have to take on the burden of managing your own connection pools and stale connections. A truly robust fix, though tedious, is to get back into your code and fix the retry logic:

Anticipate connection errors, learn to capture them, evaluate their symptoms and causes, and then update your connection retry timing accordingly. Do not take a persistent connection for granted.
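Capturing and evaluating errors can start with a small triage step that maps each failure to a retry delay. This Java sketch walks the exception's cause chain (client libraries often wrap the underlying socket exception in their own type), sorts the failure into a hypothetical category, and returns a delay. `ConnectionFailure`, `FailureTriage`, and every delay value are placeholders to be replaced with whatever your own logs show.

```java
import java.net.ConnectException;
import java.net.SocketException;
import java.net.SocketTimeoutException;

// Hypothetical categories; tune them to what your logs actually show.
enum ConnectionFailure { TIMEOUT, REFUSED, DROPPED, NOT_NETWORK }

final class FailureTriage {
    private FailureTriage() {}

    // Walks the cause chain (bounded, in case of odd cause cycles), because
    // wrappers often hide the socket exception that tells you what happened.
    static ConnectionFailure classify(Throwable t) {
        int depth = 0;
        for (Throwable c = t; c != null && depth < 16; c = c.getCause()) {
            depth++;
            if (c instanceof SocketTimeoutException) return ConnectionFailure.TIMEOUT;
            // ConnectException extends SocketException, so check it first.
            if (c instanceof ConnectException) return ConnectionFailure.REFUSED;
            if (c instanceof SocketException) return ConnectionFailure.DROPPED;
        }
        return ConnectionFailure.NOT_NETWORK;
    }

    // Placeholder delays in milliseconds; -1 means "do not retry".
    static long retryDelayMillis(ConnectionFailure f, int attempt) {
        switch (f) {
            case DROPPED:
                return 0L; // stale connection: retry at once on a fresh one
            case TIMEOUT:
                return 500L * (1L << Math.min(attempt, 6)); // slow server: back off
            case REFUSED:
                return 5_000L; // service likely down: wait longer
            default:
                return -1L; // not a network problem: surface it
        }
    }
}
```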

References:

http://docs.aws.amazon.com/AWSJavaSDK/latest/javadoc/com/amazonaws/http/IdleConnectionReaper.html