This is part two of a multipart series about Industrial IoT (IIoT). Part one explains how IoT fits into an Enterprise environment and explains what IIoT is.

“An update killed my coffeemaker!”

We are surrounded by software and devices that update constantly. Software as a service (SaaS), platform as a service (PaaS), mobile apps, and even subscription-licensed desktop software are all evergreen. Customers and users therefore expect swift bug fixes and demand a near-constant stream of new features. However, delivering a buggy release can have a huge impact on the installed customer base, and rollbacks may be difficult or impossible.

That same set of expectations now also applies to the software in hardware devices, particularly ones that are internet-connected. The impact of a bad release can be catastrophic for hardware; a repair may involve a trained technician, or the device could become unsafe and harm the users or those around them. Imagine the impact of a bug in a software update of a self-driving vehicle. Even a software bug in something as simple as a coffeemaker could become a lethal fire hazard, not to mention the threat of caffeine withdrawals.

And of course there is security to consider. A flaw in the software of a self-driving bus is one thing, but someone malicious taking over a self-driving bus sounds like a sequel to a Hollywood action film.

Quick Review of Traditional CI/CD Practices

Continuously updating cloud-based software without crashes and outages requires a set of disciplines that bake in reliability, stability, and consistency. The core disciplines, the culmination of decades of development management, are called continuous integration and continuous delivery; since they go hand in hand, they are often referred to together as CI/CD.

These CI/CD disciplines, as well as many of the technologies and processes developed recently for cloud-based deployment, can be applied to IoT as well. For example, many of the ARM-based single-board computers (SBCs) used in IoT run Linux and can run Docker, making cross-building, testing, and reliable deployment much easier. In other cases the IoT device is much more limited, has hardware dependencies that are hard to mock for testing, or uses an architecture for which containerization or virtualization technology is not readily available.

Let’s take a closer look at continuous integration and continuous delivery, with an eye toward IoT.

Continuous Integration (CI)

The process of continuously integrating new effort (i.e., code) into the codebase with some level of assurance of quality is a commonly practiced or sought-after goal in modern software development. On a higher (i.e., product) level, CI has its roots in Toyota’s Total Quality Management (TQM) and their philosophy of Kaizen: making iterative improvements to a product by testing frequently and escalating defects as early as possible.

In order to achieve and maintain truly continuous integration, a team needs to have (at least):

- Solid goal and issue tracking: if you don’t know where you’re going and have a record of where you’ve been, then you’re lost. Team goals need to be aligned with product goals.

- Functioning code management strategies: a source code management (SCM) system and a consistent, predictable branching strategy are key.

- Reasonable code review or overview practices: be it pair programming or peer review, automatically-run static analysis tools, or live background unit testing following behavior-driven development (BDD), some means of code quality accountability is a must.

- Testing: fully-automated testing with 100% code coverage may not be practical, but it’s a worthy goal nonetheless to maximize consistency. Acceptance criteria must properly reflect use cases and market demand.

Keep in mind how with IoT the “integration” may target multiple generations of devices, each with multiple configurations, and there may be millions of them scattered around the planet.

Continuous Delivery (CD)

The process of automatically and continuously delivering or deploying new software is also necessary to fulfill those much-demanded updates in a timely manner, whether as a reaction to market demand or as a proactive action to patch a security vulnerability. On the supply side, much of CD consists of putting the automated portions of a CI process into a repeatable and reliable pipeline, and it shares many tools with CI. Again, consistency is the main objective here.

CD usually provides the opportunity for a human to approve a release before a deployment can occur, even when the entire process is fully automated from end-to-end and can guarantee product quality. The approval timing may factor in elements unrelated to the software development such as legal requirements and market forces.

With cloud-based and container-based environments, automating the provisioning of resources has been made significantly easier. A container-based multi-service product can fairly easily be run and tested in a “dev environment” of a laptop while on an airplane, while with earlier technologies that was often impractical.

Before going deeper into CD practices, one must remember that the goal for CI/CD is to maximize throughput and efficiency of a product delivery pipeline. All good practices mentioned below are meant to be prioritized to a degree that makes sense for the current resource constraint(s) of your organization.

Some elements of good CD practices:

- Well-thought-out and executed environment procedures: an environment being “where” the code is executed either for testing or as the customer accesses it

- Declarative automated environment provisioning: declarative style focuses on the target state or your expectation of a provisioned environment (i.e., the “what”) rather than the mechanism (i.e., the “how”) to get there. All environments should be automated to be easily torn-down and recreated and the access to that automation should be delegated to eliminate unnecessary development and testing bottlenecks.

- Environment-independent artifacts: utilize whatever facilities the technology stack offers (e.g., containerization, dependency injection, environment parameters) to ensure that the exact code and artifacts tested in lower environments are what is tested in higher environments and eventually delivered to production with minimal alteration.

- Idempotent and parametric automation: ensure that the automation works from a parameterized description of the target state, so that if it is executed two or more times with the same parameter values, nothing of substance or cost happens after the first run.

- Repeatable and declarative pipelines: pipeline-as-code has several advantages over rigid and predefined pipelines including flexibility and better delegation of ownership.

- Tight coupling with CI processes: CD pipelines are often what drives the integration process, but the CI procedures must properly account for and take advantage of the CD procedures.
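To make the "idempotent and parametric" item above more concrete, here is a minimal sketch in Python. It is not tied to any real provisioning tool: `EnvSpec`, `plan`, and `converge` are hypothetical names, and a plain dictionary stands in for the observed state of an environment. The idea is only that the automation compares the current state with a declared target and acts on the difference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvSpec:
    """Parameterized description of the target state (hypothetical fields)."""
    name: str
    instance_count: int
    image_tag: str


def plan(current: dict, desired: EnvSpec) -> list:
    """Return the actions needed to move `current` to `desired`."""
    actions = []
    if current.get("instance_count") != desired.instance_count:
        actions.append(("scale", desired.instance_count))
    if current.get("image_tag") != desired.image_tag:
        actions.append(("deploy", desired.image_tag))
    return actions


def converge(current: dict, desired: EnvSpec) -> list:
    """Apply the plan in place and return the actions that were taken."""
    actions = plan(current, desired)
    for kind, value in actions:
        if kind == "scale":
            current["instance_count"] = value
        elif kind == "deploy":
            current["image_tag"] = value
    return actions


state = {}  # stand-in for the real environment's observed state
spec = EnvSpec(name="staging", instance_count=2, image_tag="1.4.0")
first_run = converge(state, spec)   # performs the scale and deploy actions
second_run = converge(state, spec)  # same parameters, so nothing is left to do
```

Because the second run produces an empty action list, re-running the pipeline with unchanged parameters costs nothing, which is the property the bullet describes.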

Maybe you noticed that essentially everything in CI/CD becomes code at some point: environment-as-code (e.g., AWS CloudFormation templates), pipeline-as-code (e.g., Jenkinsfiles), unit testing code, integration testing and mocking code, database schema change-sets, container description code (e.g., Dockerfiles), and so on. Codification is a natural aspect of automation and essential for repeatability and reliability, but it should be noted that many of these codify tasks that are not software development. Some are traditionally tasks for an “operations” team, and others are commonly handled by a “testing” team. These various ownerships and points of delegation must be accounted for when planning CI/CD procedures.

CI/CD for Serverless

Before talking about IoT CI/CD, it is worth discussing how Serverless deployment impacts the CI/CD process since a lot of IoT architecture is informed by Serverless technologies.

Serverless is a cloud technology that, at its core, involves running short-lived “functions” on auto-provisioned servers. There is of course a server somewhere that is executing the function, but which server, and all of the related resources (network, etc.) that are needed to make it work, are part of the cloud provider’s architecture. The real point is that there is no need to provision, bootstrap, maintain, and monitor virtual machines (VMs) or containers in order to get your business logic up and running. For private data centers it’s practical to provide internal Serverless functionality with container technologies such as Kubernetes and Docker, or a microVM technology such as Firecracker (which is used to make AWS Lambda work).

AWS Lambda, Google Cloud Functions, and Azure Functions are all Serverless technologies. The functions themselves are generally written in a platform-independent language such as Python, JavaScript, Java, or Fortran (as Google’s Kelsey Hightower demonstrated in a KubeCon keynote).

There is more to the Serverless technology stack, such as API gateway services, static file-hosting, database services, publish/subscribe message queues, and push-notification/SMS/email messaging services. A significant portion of SaaS that’s consumed by web and mobile clients can be provided using these services without (directly) provisioning a single VM or container.

This lack of provisioning and ad-hoc nature of the Serverless stack requires some alterations to the CI/CD processes:

- Environments in a Serverless stack are much lighter-weight than more traditional environments

- In particular a local development (“Dev”) environment can often be as simple as a local Docker server running on the developer’s machine.

- Mocking or recreating other Serverless services for development or testing is often as simple as running a few Docker instances in the same Docker network.

- Deployment in Serverless is generally much simpler than deployment into VMs or containers, but it’s important to maintain versioning utilizing the cloud provider’s architecture and keep it closely synced with your internal versioning and CI/CD pipeline.

- Testing is generally made easier thanks to container technologies, as it’s generally fairly simple to recreate an entire Serverless environment using Docker, either on a dev machine or as part of the CD build pipeline.

Serverless technology relies heavily on container technology, and having a good grasp of Docker will help significantly with development and testing. AWS, for example, provides the Serverless Application Model (SAM) tools to locally execute and test your functions, including mocking an API gateway. Separately, you can run DynamoDB locally, including in a Docker container.

One aspect of Serverless technology that’s particularly important to understand is that a Serverless function is not running constantly (in other words, it’s not like a daemon). Some event must trigger the Serverless system to provision and execute the function, and often that event informs how the result of the function is interpreted. For example, if an API gateway triggers a function because of an incoming request, then the event information is passed to the function and the result of the function is passed back to the client by the API gateway.
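As a rough illustration of that event-in, result-out flow, the sketch below shows a Python function in the style of an AWS Lambda handler behind a proxy-style API gateway integration. The `device_id` query parameter and the response payload are invented for the example, and the event is a hand-built subset of what a real gateway would send.

```python
import json


def handler(event, context):
    """Lambda-style entry point; `event` describes what triggered the call."""
    # The gateway may send null when there are no query parameters.
    params = event.get("queryStringParameters") or {}
    device_id = params.get("device_id", "unknown")  # hypothetical parameter
    # With a proxy-style integration, this dict is turned into the HTTP response.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"device_id": device_id, "status": "ok"}),
    }


# Local invocation with a hand-built event (no cloud resources involved).
fake_event = {"httpMethod": "GET", "queryStringParameters": {"device_id": "abc123"}}
response = handler(fake_event, None)
```

Since the function is just a callable that takes an event, it can be exercised in a unit test or CI stage long before it is deployed.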

Enterprise IoT CI/CD

Resource Provisioning

With a cloud-based technology that provides virtualized compute resources, any necessary resources must first be provisioned or be configured to react to just-in-time scaling events. When those resources are no longer necessary, they should be properly released.

With Serverless cloud technology the provisioning is minimal, and is mostly concerned with providing the code to execute and configuring what triggers that code.

IoT architecture sits somewhere in between, as the resources of the devices are effectively already “provisioned,” but need to be configured with the code to execute and what triggers that code.

Environments

In traditional development it’s common to have several environments that are known and predictable, even when they are ephemeral parts of a CD pipeline. Deployment of updates to a SaaS may involve some ramp-up or allow users to test a new UI, but is still often a blue/green type of affair where there are two options: previous and next.

For IoT development, most of your production environment is the devices themselves. If the device is a consumer product, then eventually there will be several generations of the product to support. Depending on the hardware, each of those generations may need a different build of the firmware.

Testing has to account for multiple generations of hardware with multiple firmwares, the flaws and capability limits of these, as well as simulating limited or unreliable internet connections or other less-than-ideal working environments. In some cases, these may involve physical environment edge-cases such as the xenon death-flash. (Hardware is hard!)
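One way to keep that combinatorial growth visible is to generate the test matrix from data rather than maintain it by hand. The following is a minimal sketch; the generation names, firmware versions, and network conditions are all made up for illustration.

```python
from itertools import product

# Hypothetical inventory: firmware builds supported on each hardware generation.
SUPPORTED_FIRMWARE = {
    "gen1": ["1.8.2", "2.0.0"],
    "gen2": ["2.0.0", "2.1.0"],
}
NETWORK_CONDITIONS = ["good", "lossy", "offline"]


def build_test_matrix():
    """Enumerate every (generation, firmware, network) combination to test."""
    matrix = []
    for generation, firmwares in SUPPORTED_FIRMWARE.items():
        for firmware, network in product(firmwares, NETWORK_CONDITIONS):
            matrix.append((generation, firmware, network))
    return matrix


matrix = build_test_matrix()
```

Even this toy inventory yields twelve combinations, and each new generation or firmware build multiplies the count, which is worth knowing when sizing a device test lab.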

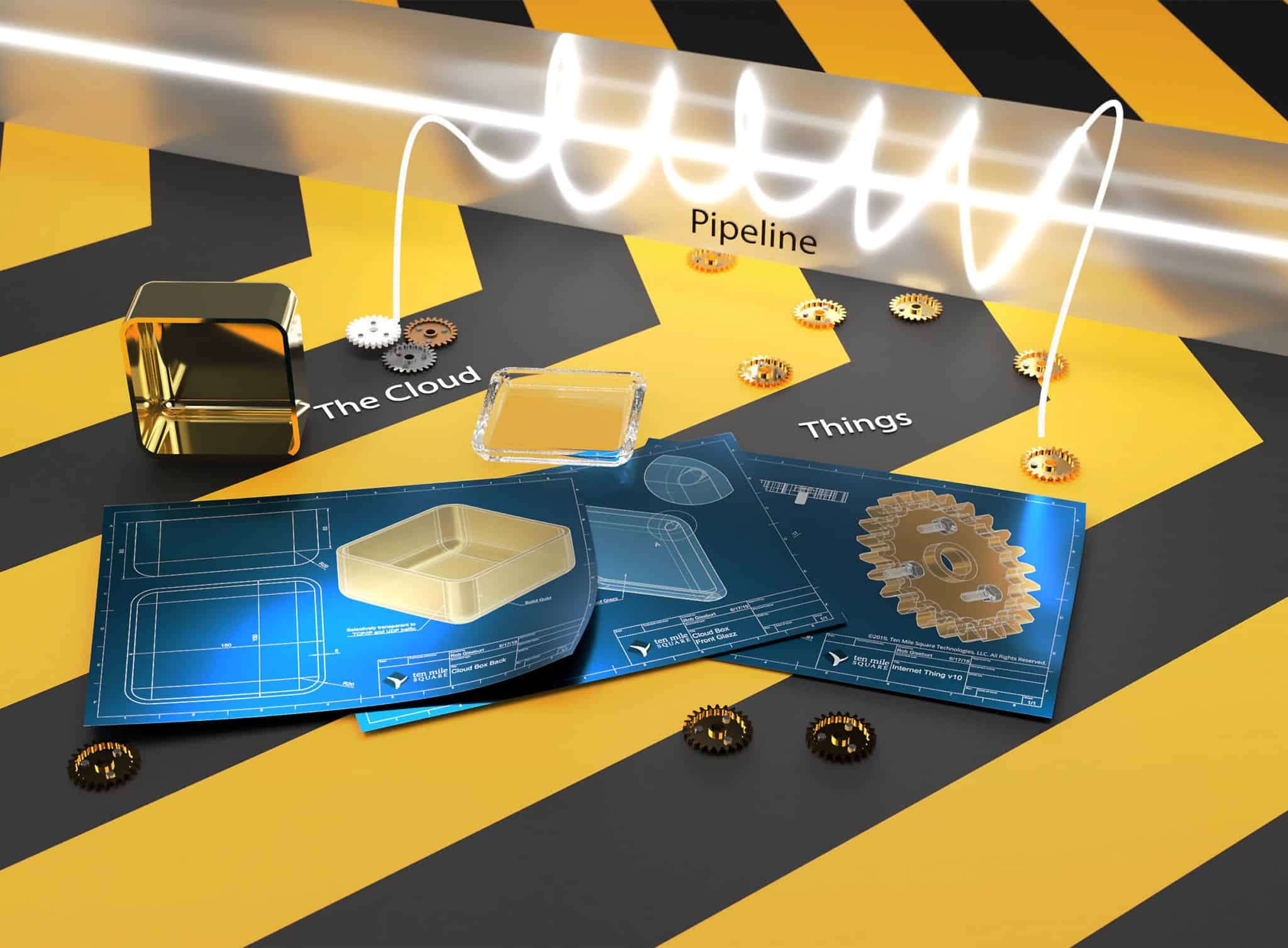

Pipelines and Assembly Lines

A hardware manufacturing assembly line is a physical manifestation (or one may argue the original) of a CI/CD pipeline. All of the phases of a software pipeline (compiling, unit testing, dependencies, packaging, integration testing, etc.) are present in a hardware assembly pipeline, they just look different.

One major difference is that in a hardware CI/CD pipeline every “build” that passes the tests is then deployed to a customer. There is also more room for error when working with hardware, and any number of things can happen after the device is packaged and shipped.

The hardware testing not only verifies the quality of the product but also records a snapshot of the state of the product before it leaves the factory. This is why it is vital that the data generated by the hardware tests be uniquely associated with the device and stored as the device’s initial cloud footprint. The test process (hardware, software, and manual processes) should be fine-grained, versioned, and recorded meticulously. If a test failed once but was then retested and passed (perhaps after a minor repair), that needs to be recorded whether the product is shipped or scrapped. (One is for customer support and the other is for process analysis and improvement. Data is data.) Tests that have acceptable ranges of results need to have the measured result recorded along with the pass/fail status, in case the acceptable range is revised in later versions of the test.
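The record-keeping described above could be sketched as follows. This is an illustrative data model only; the class names, the `battery_voltage` test, and the voltage limits are assumptions, and a real system would persist these records to a database rather than keep them in memory.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MeasurementResult:
    test_name: str
    test_version: str
    measured: float   # raw value, kept even though pass/fail is also stored
    low: float
    high: float
    attempt: int
    passed: bool      # verdict under the range in force at test time


@dataclass
class DeviceRecord:
    serial: str       # unique association between test data and device
    results: list = field(default_factory=list)

    def record(self, test_name, test_version, measured, low, high):
        """Append a result; earlier attempts are never overwritten."""
        attempt = 1 + sum(r.test_name == test_name for r in self.results)
        result = MeasurementResult(test_name, test_version, measured,
                                   low, high, attempt, low <= measured <= high)
        self.results.append(result)
        return result

    def recheck(self, test_name, low, high):
        """Re-evaluate stored measurements against a revised range."""
        return [low <= r.measured <= high
                for r in self.results if r.test_name == test_name]


device = DeviceRecord("SN-0001")
device.record("battery_voltage", "1.0", 3.28, 3.30, 3.70)  # fails
device.record("battery_voltage", "1.0", 3.45, 3.30, 3.70)  # passes after a repair
history = [(r.attempt, r.passed) for r in device.results]
rechecked = device.recheck("battery_voltage", 3.25, 3.70)  # revised lower limit
```

Keeping the raw measurement is what makes `recheck` possible: under the revised range the first attempt would have passed, a conclusion that cannot be drawn from a stored pass/fail flag alone.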

The unboxing and initial setup process often involves associating the device with a specific customer or user and then attaching that device to cloud resources. These cloud resources may also need to be provisioned. The associated user may own or control some of these related cloud resources. When the device is retired, these cloud resources should be released if possible.

Often the use of the device depends on payment of a subscription to the seller of the device, so the device or the related cloud services may be disabled upon lack of payment.

Depending on the product, it may not be practical or popular to automatically push out updates to the products. Some customers’ business needs critically depend on the device, so as long as the device is functioning “well enough” they may not want an update for minor bug fixes and new features. In some cases, customers may actually become upset and seek alternative products if forced to update.

It is vital to determine, as soon as possible, whether the target users of these devices will tolerate automatic updates, whether they can be expected to run updates manually in a reasonably timely manner, or whether they should only be expected to run updates manually in cases of severe bugs or major new features.

For example, a small business that relies on a critical-path device may avoid updates except to fix massive bugs. Conversely, for a device that is standard issue for an enterprise fleet (e.g., the tablet used by a delivery person), updates may be mandated globally and fully under your control.

Depending on what’s determined, it may be necessary to support not only the older devices but also the older software or firmware versions on those older devices in related services. Careful planning and testing of backward compatibility is a must in any case.
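The three levels of update tolerance discussed above can be captured as an explicit per-device policy that the delivery pipeline consults before acting. The sketch below is hypothetical: the policy names, release kinds, and the `push`/`notify`/`hold` outcomes are illustrative choices, not part of any particular platform.

```python
from enum import Enum


class UpdatePolicy(Enum):
    AUTOMATIC = "automatic"          # fleet-managed devices, updates mandated
    MANUAL = "manual"                # user runs updates in a timely manner
    CRITICAL_ONLY = "critical_only"  # user updates only when it really matters


class ReleaseKind(Enum):
    MINOR = "minor"
    MAJOR_FEATURE = "major_feature"
    SEVERE_BUGFIX = "severe_bugfix"


def update_action(policy, release):
    """Return 'push', 'notify', or 'hold' for a device with the given policy."""
    if policy is UpdatePolicy.AUTOMATIC:
        return "push"
    if policy is UpdatePolicy.MANUAL:
        return "notify"
    # CRITICAL_ONLY: stay quiet unless the release is important.
    if release in (ReleaseKind.MAJOR_FEATURE, ReleaseKind.SEVERE_BUGFIX):
        return "notify"
    return "hold"
```

For instance, `update_action(UpdatePolicy.CRITICAL_ONLY, ReleaseKind.MINOR)` returns `"hold"`, which also means that device stays on its old firmware and the related services must keep supporting that version.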

Microclouds

If multiple devices are commonly deployed into the same facility or multiple devices are packaged as a single unit, then small local “clouds” (microclouds) such as AWS Greengrass can be utilized to ease deployment of these devices. By having service discovery, message handling, and configuration syncing locally the microcloud is effectively decoupled from the external cloud, isolating the devices of the microcloud from any connectivity concerns. As an example, in a small business office with multiple mission-critical devices, if the office internet connection becomes unstable, then the devices can no longer reliably coordinate via The Cloud. With a microcloud in the office, these critical devices can continue to speak to each other and resume synchronization when the internet connection comes back up.
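The decoupling in the office example can be pictured as a store-and-forward bridge: messages are delivered locally right away and queued for the cloud until the uplink is available. The sketch below is a generic illustration and does not use the AWS Greengrass API; `MicrocloudBridge` and the `send_to_cloud` callable are hypothetical.

```python
from collections import deque


class MicrocloudBridge:
    """Delivers messages locally at once; forwards to the cloud when online."""

    def __init__(self, send_to_cloud):
        self.send_to_cloud = send_to_cloud  # hypothetical uplink callable
        self.online = True
        self.pending = deque()
        self.local_log = []

    def publish(self, message):
        self.local_log.append(message)  # local peers see it regardless of uplink
        self.pending.append(message)
        self.flush()

    def flush(self):
        # Forward the backlog in order, but only while the uplink is available.
        while self.online and self.pending:
            self.send_to_cloud(self.pending.popleft())

    def set_online(self, online):
        self.online = online
        self.flush()


cloud = []
bridge = MicrocloudBridge(cloud.append)
bridge.publish("door:open")
bridge.set_online(False)
bridge.publish("door:closed")    # buffered, still delivered locally
buffered = list(bridge.pending)
bridge.set_online(True)          # connection returns, backlog is forwarded
```

A production bridge would also need to bound the queue and persist it across restarts, which this sketch leaves out.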

Additionally, by utilizing the same infrastructure-as-code technologies (e.g., CloudFormation), the microclouds can be configured and maintained alongside the rest of the cloud environment seamlessly.

Devices within a microcloud can communicate with each other regardless of internet connectivity status, and the microcloud can contain bridge devices that extend traditional networking (e.g., Ethernet, Wi-Fi) with wireless protocols that suit less-capable devices (e.g., ZigBee, Bluetooth, 6LoWPAN), or even with direct wired connections (e.g., I²C, 1-Wire, UART).

Microclouds can also act in a serverless capacity. For example, AWS Greengrass Core can execute Lambdas that are either traditional on-demand or (unique to Greengrass) long-running, much like a daemon. Technically these Lambdas can utilize local resources such as direct access to hardware. However, without specific tooling support for intelligent multi-architecture deployment, this quickly becomes an exercise in maintaining a CloudFormation template (or parameter set) for every possible deployment target configuration, which can become unmanageable.

Luckily, another feature of AWS Greengrass is the IoT Jobs functionality, which, along with a Lambda “Job-runner,” can be used to run predetermined tasks, including updating firmware or software for attached devices.
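A job-runner of this kind is essentially a dispatcher over a fixed set of allowed operations. The sketch below shows only that dispatch logic and makes no AWS calls; the job document fields (`operation`, `target`, `version`) and the handler functions are assumptions for illustration, with status strings borrowed from the style of IoT Jobs execution states.

```python
def update_firmware(job):
    # Placeholder for the real flashing procedure.
    return f"flashed {job['target']} with firmware {job['version']}"


def restart_service(job):
    # Placeholder for restarting a service on the attached device.
    return f"restarted {job['target']}"


# Only predetermined operations are allowed; anything else is rejected.
HANDLERS = {
    "update_firmware": update_firmware,
    "restart_service": restart_service,
}


def run_job(job_document):
    """Dispatch a job document to its handler and report the outcome."""
    job_handler = HANDLERS.get(job_document.get("operation"))
    if job_handler is None:
        return {"status": "REJECTED", "detail": "unknown operation"}
    try:
        return {"status": "SUCCEEDED", "detail": job_handler(job_document)}
    except KeyError as missing:
        return {"status": "FAILED", "detail": f"missing field {missing}"}


result = run_job({"operation": "update_firmware", "target": "sensor-7", "version": "2.1.0"})
```

Restricting the runner to a whitelist of operations keeps arbitrary commands from reaching devices, which matters given the security concerns raised at the start of this article.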

Moving forward

So far we have been discussing general IoT concerns and possible solutions from a pretty high level. In the following articles we will dive deep and start to get our hands dirty.